Algorithm Helps Deaf Musicians Feel Rhythm Through Vibrations

Music is usually thought of as an auditory art form, seemingly inaccessible to those who cannot hear. Yet throughout history, deaf musicians have found ways to experience and create music, often by feeling vibrations through floors, instruments, and their own bodies. Now, an algorithm developed by researchers specializing in haptic technology is changing how deaf musicians interact with music. It translates complex rhythmic information into precisely calibrated vibration patterns that convey musical timing and feel.

Understanding the Challenge

For hearing musicians, rhythm feels intuitive. The brain processes audio signals and naturally extracts beat, tempo, and timing information. Deaf musicians have historically relied on visual cues from conductors or fellow performers, along with whatever vibrations they could perceive naturally. While effective to some degree, these methods often lack the precision and immediacy that hearing musicians take for granted.

The Limitations of Natural Vibration

Simply amplifying sound and transmitting it as vibration proves insufficient for musical performance. Low frequencies travel well through solid materials, but higher frequencies that carry crucial rhythmic information often get lost. Moreover, environmental vibrations create noise that obscures the musical signal. The new algorithm addresses these challenges through intelligent signal processing and targeted delivery.

How the Technology Works

The system comprises three main components that work together to deliver meaningful musical information through touch: an audio analysis engine, a haptic translation layer, and a wearable delivery system. Each is designed to maximize the clarity and usefulness of the transmitted vibrations.

Audio Analysis Engine

The algorithm begins by analyzing incoming audio in real time and extracting rhythmic elements, including:

- Beat locations and tempo variations

- Accent patterns and dynamic emphasis

- Rhythmic subdivisions and syncopation

- Instrument-specific attack characteristics

- Ensemble timing relationships
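The article does not say how the analysis engine extracts these elements. As a rough illustration of the first item only (beat locations and tempo), the sketch below uses a simple energy-jump onset detector, which is a swapped-in textbook technique and not the researchers' method. The function names, frame size, and thresholds are all assumptions chosen for the example.

```python
def frame_energies(samples, frame_size):
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(x * x for x in samples[i:i + frame_size]) / frame_size
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def detect_onsets(samples, sample_rate, frame_size=256, ratio=4.0, floor=1e-4):
    """Return onset times in seconds where frame energy jumps sharply.

    A frame counts as an onset when its energy is above `floor` (assumed
    noise threshold) and more than `ratio` times the previous frame's energy.
    """
    energies = frame_energies(samples, frame_size)
    onsets = []
    for i in range(1, len(energies)):
        prev, cur = energies[i - 1], energies[i]
        if cur > floor and cur > ratio * prev:
            onsets.append(i * frame_size / sample_rate)
    return onsets


def estimate_tempo_bpm(onsets):
    """Estimate tempo from the median inter-onset interval, or None."""
    if len(onsets) < 2:
        return None
    intervals = sorted(b - a for a, b in zip(onsets, onsets[1:]))
    median = intervals[len(intervals) // 2]
    return 60.0 / median
```

A detector this simple would miss soft attacks and would be confused by sustained sounds. Accent patterns, syncopation, and ensemble timing would need considerably more analysis than is shown here.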

Haptic Translation Layer

Extracted rhythmic information passes through a translation layer that converts audio characteristics into vibration parameters. The algorithm maps musical dynamics to vibration intensity, converts timing information into precise pulse sequences, and assigns different instruments or musical elements to distinct vibration patterns that users can learn to distinguish.

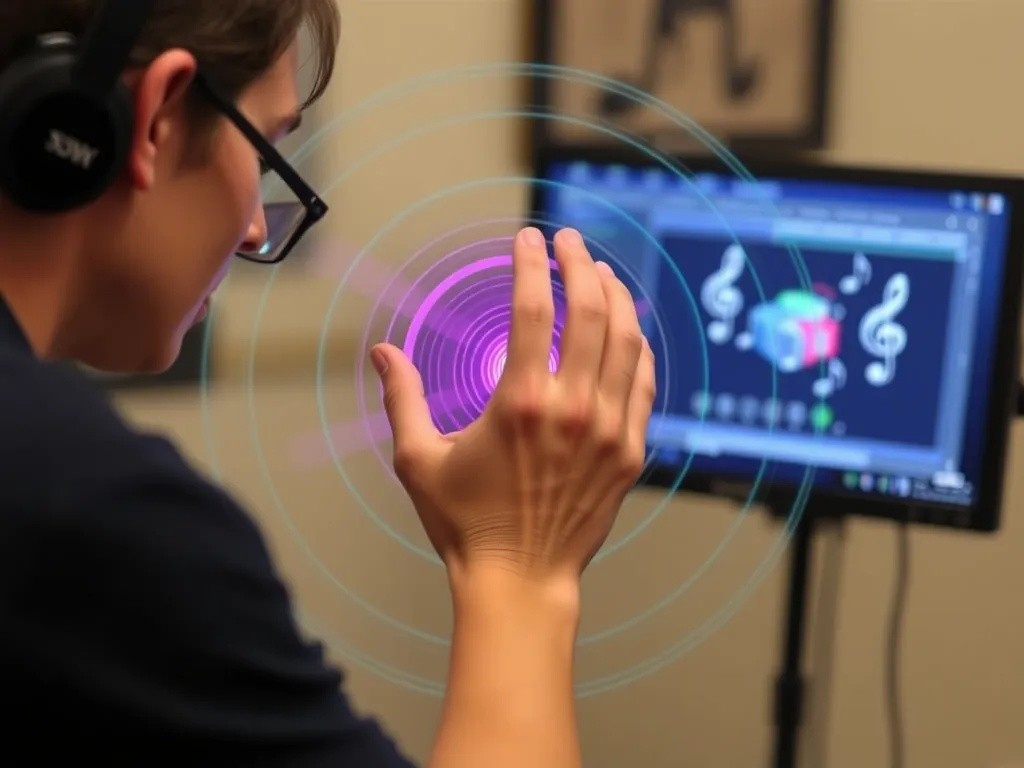
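To make the translation step more concrete, here is a minimal sketch of how a rhythmic event might be mapped to vibration parameters: dynamics to intensity, timing to a pulse start time, and instrument to a distinct pattern. The `RhythmEvent` and `HapticPulse` types, the pattern table, and the 0 to 255 intensity scale are hypothetical and are not taken from the system described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RhythmEvent:
    time_s: float    # when the note or beat occurs, in seconds
    loudness: float  # normalized dynamic level, 0.0 to 1.0
    source: str      # instrument or musical element, e.g. "kick"


@dataclass(frozen=True)
class HapticPulse:
    start_ms: int
    duration_ms: int
    intensity: int   # assumed motor drive level, 0 to 255
    pattern: str


# Hypothetical table: each source gets its own pattern name and pulse length
# so that users can learn to tell sources apart by feel.
PATTERNS = {
    "kick": ("single", 80),
    "snare": ("double", 40),
    "hihat": ("tick", 15),
}
DEFAULT_PATTERN = ("single", 50)


def translate(event, min_intensity=40):
    """Map one rhythmic event to one vibration pulse.

    `min_intensity` is an assumed perceptual floor: even the quietest
    event is driven strongly enough to be felt.
    """
    loud = min(max(event.loudness, 0.0), 1.0)  # clamp to the valid range
    intensity = min_intensity + round(loud * (255 - min_intensity))
    pattern, duration_ms = PATTERNS.get(event.source, DEFAULT_PATTERN)
    return HapticPulse(round(event.time_s * 1000), duration_ms, intensity, pattern)
```

For example, `translate(RhythmEvent(0.5, 1.0, "kick"))` yields a full-intensity "single" pulse starting at 500 ms. A linear loudness mapping is the simplest choice; a real device would likely need a curve tuned to tactile sensitivity.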

Wearable Delivery System

The vibrations reach the musician through specialized wearable devices. Current implementations include wristbands, vests, and floor pads that deliver calibrated haptic feedback to different body areas. Research has shown that distributing vibrations across multiple contact points enhances the perception of complex rhythmic patterns.
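One way to picture distribution across contact points is a routing table that sends each musical element to a different device. The sketch below is illustrative only: the site names echo the wristbands, vests, and floor pads mentioned above, but the assignment of instruments to sites and the `route_pulses` function are assumptions.

```python
from collections import defaultdict

# Hypothetical assignment of musical elements to body contact points.
ROUTES = {
    "kick": "floor_pad",
    "bass": "vest",
    "snare": "wristband",
    "hihat": "wristband",
}


def route_pulses(pulses, routes=ROUTES, default_site="vest"):
    """Group (time_s, source, intensity) tuples into per-site timelines.

    Returns a dict mapping each site to a time-ordered list of
    (time_s, intensity) pairs. Unknown sources go to `default_site`.
    """
    timelines = defaultdict(list)
    for time_s, source, intensity in pulses:
        site = routes.get(source, default_site)
        timelines[site].append((time_s, intensity))
    return {site: sorted(items) for site, items in timelines.items()}
```

Keeping one timeline per site means each device only needs to play back its own ordered list of pulses.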

Impact on Deaf Musicians

Musicians who have tested the system report transformative experiences. Many describe feeling truly connected to the music for the first time, able to perceive subtleties of rhythm and timing that were previously imperceptible to them. The technology has let them take part in ensemble performance with tighter synchronization than was previously possible.

Educational Applications

Music educators working with deaf students have embraced the technology as a teaching tool. Students can now feel the difference between correct and incorrect rhythm, receiving immediate feedback during practice sessions. This capability accelerates learning and enables more precise instruction in rhythmic concepts that were previously difficult to communicate.
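The kind of immediate feedback described here could, in principle, come from comparing a student's note onsets against the expected beat times. The sketch below is an assumption about how such a check might work, not a description of the actual teaching tool. The function name, the 50 ms tolerance, and the 200 ms miss window are illustrative values.

```python
def timing_feedback(played, expected, tolerance_s=0.05, miss_window_s=0.2):
    """Label each expected beat as on_time, early, late, or missed.

    `played` and `expected` are lists of times in seconds. Each expected
    beat is compared with the nearest played onset. Returns a list of
    (expected_time, label) pairs.
    """
    feedback = []
    for target in expected:
        if not played:
            feedback.append((target, "missed"))
            continue
        nearest = min(played, key=lambda t: abs(t - target))
        error = nearest - target
        if abs(error) <= tolerance_s:
            label = "on_time"
        elif abs(error) > miss_window_s:
            label = "missed"  # nothing was played close enough to this beat
        elif error < 0:
            label = "early"
        else:
            label = "late"
        feedback.append((target, label))
    return feedback
```

Each label could then trigger a different vibration cue, so the student feels the correction during practice without having to look at a screen.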

Professional Performance

Several professional deaf musicians have incorporated the system into their performance practice. Orchestra musicians use it to stay synchronized with conductors and fellow performers. Solo performers connect to backing tracks or accompanists with newfound precision. The technology has opened doors to performance contexts that were previously challenging for deaf musicians to navigate.

Broader Implications

Beyond its immediate applications for deaf musicians, the technology raises interesting questions about the nature of musical perception. Researchers have discovered that hearing musicians also benefit from haptic rhythm feedback, suggesting that tactile perception of music offers unique advantages regardless of hearing ability.

The algorithm continues to evolve as developers gather feedback from users and expand the system's capabilities. Future versions may incorporate melody and harmony information alongside rhythm, potentially creating entirely new ways to experience music through touch. For now, the technology stands as a powerful example of how thoughtful engineering can remove barriers and expand human creative potential.